Trying out Spring Boot + Spring Batch

Hello, this is Hoge PG, a cat-loving salaryman. This time I will try out Spring Boot + Spring Batch.

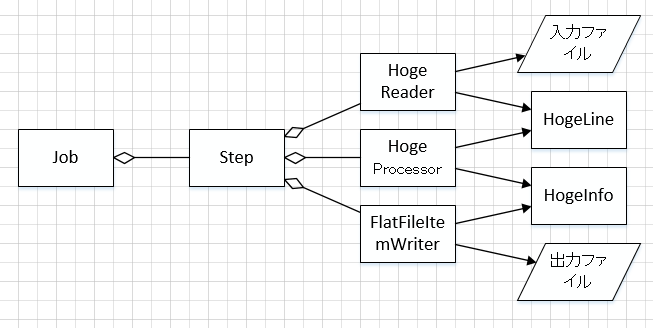

1. Module structure

The main piece is HogeConfig, which builds the structure shown above.

2. Project

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>sample</groupId>

<artifactId>hoge-spring-batch</artifactId>

<packaging>jar</packaging>

<version>1.0.0-SNAPSHOT</version>

<name>hogeSpringbatch</name>

<url>http://maven.apache.org</url>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.2.1.RELEASE</version>

</parent>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-batch</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.h2database</groupId>

<artifactId>h2</artifactId>

</dependency>

</dependencies>

<build>

<finalName>hoge</finalName>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

HogeApp.java

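import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.EnableAutoConfiguration;

import org.springframework.context.ApplicationContext;

import org.springframework.context.annotation.ComponentScan;

import lombok.extern.slf4j.Slf4j;

/**

* Hoge: entry point that runs the batch job and exits with its exit code.

*/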

@EnableAutoConfiguration

@ComponentScan

@Slf4j

public class HogeApp {

public static void main(String[] args) throws Exception {

log.info("start... {}", String.join(", ", args));

ApplicationContext context = SpringApplication.run(HogeApp.class, args);

int exitCode = SpringApplication.exit(context);

log.info("exit... {}", exitCode);

System.exit(exitCode);

}

}

HogeConfig.java

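import java.io.IOException;

import javax.annotation.PostConstruct;

import org.springframework.batch.core.Job;

import org.springframework.batch.core.JobExecutionListener;

import org.springframework.batch.core.Step;

import org.springframework.batch.core.configuration.annotation.DefaultBatchConfigurer;

import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;

import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;

import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;

import org.springframework.batch.core.configuration.annotation.StepScope;

import org.springframework.batch.core.explore.JobExplorer;

import org.springframework.batch.core.explore.support.MapJobExplorerFactoryBean;

import org.springframework.batch.core.launch.JobLauncher;

import org.springframework.batch.core.launch.support.RunIdIncrementer;

import org.springframework.batch.core.launch.support.SimpleJobLauncher;

import org.springframework.batch.core.repository.JobRepository;

import org.springframework.batch.core.repository.support.MapJobRepositoryFactoryBean;

import org.springframework.batch.item.file.FlatFileItemWriter;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.io.FileSystemResource;

import lombok.extern.slf4j.Slf4j;

@Configuration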

@EnableBatchProcessing

@Slf4j

public class HogeConfig {

@Bean

DefaultBatchConfigurer batchConfigurer() {

return new DefaultBatchConfigurer() {

private JobRepository jobRepository;

private JobExplorer jobExplorer;

private JobLauncher jobLauncher;

@PostConstruct

private void init() throws Exception {

MapJobRepositoryFactoryBean jobRepositoryFactory = new MapJobRepositoryFactoryBean();

jobRepository = jobRepositoryFactory.getObject();

MapJobExplorerFactoryBean jobExplorerFactory = new MapJobExplorerFactoryBean(jobRepositoryFactory);

jobExplorer = jobExplorerFactory.getObject();

SimpleJobLauncher simpleJobLauncher = new SimpleJobLauncher();

simpleJobLauncher.setJobRepository(jobRepository);

simpleJobLauncher.afterPropertiesSet();

jobLauncher = simpleJobLauncher;

}

@Override

public JobRepository getJobRepository() {

return jobRepository;

}

@Override

public JobExplorer getJobExplorer() {

return jobExplorer;

}

@Override

public JobLauncher getJobLauncher() {

return jobLauncher;

}

};

}

@Autowired

private JobBuilderFactory jobBuilderFactory;

@Autowired

private StepBuilderFactory stepBuilderFactory;

@Value("${hoge.chunk:1}")

private int chunk;

@Bean

public Job job() throws IOException {

log.debug("called.");

return jobBuilderFactory.get("job1")

.incrementer(new RunIdIncrementer())

.listener(listener())

.start(step())

.build();

}

@Bean

public JobExecutionListener listener() {

return new JobListener();

}

@Bean

public Step step() throws IOException {

log.debug("called.");

return stepBuilderFactory.get("step")

.<HogeLine, HogeInfo> chunk(chunk)

.reader(reader(null)) // null is a placeholder; @StepScope injects jobParameters[in] when the step runs

.processor(processor())

.writer(writer(null)) // null is a placeholder; @StepScope injects jobParameters[out] when the step runs

.faultTolerant()

.skip(Exception.class)

.skipLimit(Integer.MAX_VALUE)

.build();

}

@Bean

@StepScope

public HogeReader reader(@Value("#{jobParameters[in]}") String fileName) throws IOException {

return new HogeReader(fileName);

}

@Bean

@StepScope

public HogeProcessor processor() {

return new HogeProcessor();

}

@Bean

@StepScope

public FlatFileItemWriter<HogeInfo> writer(@Value("#{jobParameters[out]}") String filename) {

FlatFileItemWriter<HogeInfo> writer = new FlatFileItemWriter<>();

writer.setResource(new FileSystemResource(filename));

writer.setLineAggregator(item -> {

StringBuilder sb = new StringBuilder();

sb.append(item.getName());

sb.append("-");

sb.append(item.getHoge());

return sb.toString();

});

return writer;

}

}

Note:

The input file name and the output file name are specified through launch parameters.
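To make the parameter passing a little more concrete: the program is started with arguments such as `in=in.csv out=out.csv`, and the `#{jobParameters[in]}` and `#{jobParameters[out]}` expressions in HogeConfig pick up the values by key. The following is a minimal plain-Java sketch of that key=value convention. `LaunchArgs` is a hypothetical helper written only for illustration; it is an assumption about the observable behavior and not how Spring Boot converts arguments internally.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper (not part of Spring Batch): turns "key=value" arguments into a map. */
final class LaunchArgs {

    /** Parses arguments of the form key=value, keeping their original order. */
    static Map<String, String> parse(String... args) {
        Map<String, String> params = new LinkedHashMap<>();
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (eq <= 0) {
                continue; // ignore anything that is not key=value
            }
            params.put(arg.substring(0, eq), arg.substring(eq + 1));
        }
        return params;
    }

    public static void main(String[] args) {
        Map<String, String> params = parse("in=in.csv", "out=out.csv");
        System.out.println(params); // {in=in.csv, out=out.csv}
    }
}
```

In the real application the lookup by key is done by the step-scoped beans, so the reader and writer never see the raw argument array.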

HogeReader.java

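import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import javax.annotation.PreDestroy;

import org.springframework.batch.item.ItemReader;

import lombok.extern.slf4j.Slf4j;

@Slf4j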

public class HogeReader implements ItemReader<HogeLine> {

String fileName;

int lineNo = 0;

BufferedReader fr;

public HogeReader(String fn) throws IOException {

log.debug("called. {}", fn);

this.fileName = fn;

fr = new BufferedReader(new FileReader(fileName));

}

@Override

public HogeLine read() throws IOException {

log.debug("called. {}", lineNo);

String line = fr.readLine();

if (line == null) return null;

lineNo++;

return new HogeLine(line);

}

@PreDestroy

public void close() throws IOException {

log.debug("called.");

fr.close();

}

}

HogeProcessor.java

import org.springframework.batch.item.ItemProcessor;

import lombok.extern.slf4j.Slf4j;

@Slf4j

public class HogeProcessor implements ItemProcessor<HogeLine, HogeInfo> {

public HogeProcessor() {

log.debug("called.");

}

@Override

public HogeInfo process(HogeLine line) throws Exception {

log.debug("called. {}", line);

String[] cols = line.getLine().split(",");

return new HogeInfo(cols[0], cols[1]);

}

}
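The step in HogeConfig is declared with `faultTolerant().skip(Exception.class).skipLimit(Integer.MAX_VALUE)`, so a line that makes the processor throw (for example, a line without a comma, where `cols[1]` does not exist) is skipped instead of failing the job. Below is a rough plain-Java sketch of that observable effect only. `SkipSketch` and its methods are hypothetical names, and the framework's real mechanism is transactional and more involved than a simple try/catch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration of "skip the item when processing throws"; not Spring Batch code. */
final class SkipSketch {

    /** Splits "name,hoge" the same way HogeProcessor does; throws if the second column is missing. */
    static String[] process(String line) {
        String[] cols = line.split(",");
        return new String[] { cols[0], cols[1] };
    }

    /** Processes every line, dropping those that throw, and counts the skips in skipped[0]. */
    static List<String[]> processAll(List<String> lines, int[] skipped) {
        List<String[]> out = new ArrayList<>();
        for (String line : lines) {
            try {
                out.add(process(line));
            } catch (RuntimeException e) {
                skipped[0]++; // comparable in effect to skip(Exception.class)
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int[] skipped = new int[1];
        List<String[]> ok = processAll(Arrays.asList("abc,hoge", "broken", "hoge,HOGE!"), skipped);
        System.out.println(ok.size() + " processed, " + skipped[0] + " skipped"); // 2 processed, 1 skipped
    }
}
```

With an unlimited skip limit, bad input never stops the job, so it is probably worth logging or counting skipped lines somewhere if the data matters.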

HogeLine.java

import lombok.Value;

@Value

public class HogeLine {

String line;

}

HogeInfo.java

import lombok.Value;

@Value

public class HogeInfo {

String name;

String hoge;

}

application.yml

spring.main:

  # No startup banner

  banner-mode: "off"

  # Do not start the embedded web server

  web-application-type: none

# Do not run the schema initialization script

spring.batch.initialize-schema: never

# Logging

logging:

  pattern:

    console: "%d{HH:mm:ss.SSS} %thread %-5level \\(%file:%line\\) %M - %msg%n"

  level:

    ROOT: INFO

    jp.co.ois.sample: DEBUG

# Hoge

hoge.chunk: 2

in.csv

abc,hoge

def,moge

hoge,HOGE!

Running it

> mvn clean package

> java -jar target/hoge.jar in=in.csv out=out.csv

14:25:30.170 [main] INFO jp.co.ois.sample.batch.HogeApp - start... in=in.csv, out=out.csv

14:25:31.188 main INFO (StartupInfoLogger.java:55) logStarting - Starting HogeApp v1.0.0-SNAPSHOT on NDYWM7A3420152 with PID 4344 (C:\work\masuda\myrepo\hogeSpringbatch\target\hoge.jar started by horqu in C:\work\masuda\myrepo\hogeSpringbatch)

14:25:31.189 main DEBUG (StartupInfoLogger.java:56) logStarting - Running with Spring Boot v2.2.1.RELEASE, Spring v5.2.1.RELEASE

14:25:31.190 main INFO (SpringApplication.java:651) logStartupProfileInfo - No active profile set, falling back to default profiles: default

14:25:32.211 main WARN (DefaultBatchConfigurer.java:63) setDataSource - No transaction manager was provided, using a DataSourceTransactionManager

14:25:32.236 main INFO (HikariDataSource.java:110) getConnection - HikariPool-1 - Starting...

14:25:32.639 main INFO (HikariDataSource.java:123) getConnection - HikariPool-1 - Start completed.

14:25:32.653 main INFO (JobRepositoryFactoryBean.java:184) afterPropertiesSet - No database type set, using meta data indicating: H2

14:25:32.894 main INFO (SimpleJobLauncher.java:209) afterPropertiesSet - No TaskExecutor has been set, defaulting to synchronous executor.

14:25:32.910 main INFO (SimpleJobLauncher.java:209) afterPropertiesSet - No TaskExecutor has been set, defaulting to synchronous executor.

14:25:32.912 main DEBUG (HogeConfig.java:84) job - called.

14:25:32.917 main DEBUG (HogeConfig.java:99) step - called.

14:25:33.212 main INFO (StartupInfoLogger.java:61) logStarted - Started HogeApp in 2.835 seconds (JVM running for 3.691)

14:25:33.213 main INFO (JobLauncherCommandLineRunner.java:147) run - Running default command line with: [in=in.csv, out=out.csv]

14:25:33.263 main INFO (SimpleJobLauncher.java:145) run - Job: [SimpleJob: [name=job1]] launched with the following parameters: [{run.id=1, in=in.csv, out=out.csv}]

14:25:33.309 main DEBUG (JobListener.java:16) beforeJob - called. JobExecution: id=0, version=1, startTime=Mon Dec 16 14:25:33 JST 2019, endTime=null, lastUpdated=Mon Dec 16 14:25:33 JST 2019, status=STARTED, exitStatus=exitCode=UNKNOWN;exitDescription=, job=[JobInstance: id=0, version=0, Job=[job1]], jobParameters=[{run.id=1, in=in.csv, out=out.csv}]

14:25:33.319 main INFO (SimpleStepHandler.java:146) handleStep - Executing step: [step]

14:25:33.425 main DEBUG (HogeReader.java:22) <init> - called. in.csv

14:25:33.430 main DEBUG (HogeReader.java:29) read - called. 0

14:25:33.436 main DEBUG (HogeReader.java:29) read - called. 1

14:25:33.442 main DEBUG (HogeProcessor.java:13) <init> - called.

14:25:33.445 main DEBUG (HogeProcessor.java:18) process - called. HogeLine(line=abc,hoge)

14:25:33.446 main DEBUG (HogeProcessor.java:18) process - called. HogeLine(line=def,moge)

14:25:33.462 main DEBUG (HogeReader.java:29) read - called. 2

14:25:33.463 main DEBUG (HogeReader.java:29) read - called. 3

14:25:33.463 main DEBUG (HogeProcessor.java:18) process - called. HogeLine(line=hoge,HOGE!)

14:25:33.467 main INFO (AbstractStep.java:272) execute - Step: [step] executed in 148ms

14:25:33.472 main DEBUG (HogeReader.java:38) close - called.

14:25:33.474 main DEBUG (JobListener.java:22) afterJob - called. JobExecution: id=0, version=1, startTime=Mon Dec 16 14:25:33 JST 2019, endTime=Mon Dec 16 14:25:33 JST 2019, lastUpdated=Mon Dec 16 14:25:33 JST 2019, status=COMPLETED, exitStatus=exitCode=COMPLETED;exitDescription=, job=[JobInstance: id=0, version=0, Job=[job1]], jobParameters=[{run.id=1, in=in.csv, out=out.csv}]

14:25:33.477 main INFO (SimpleJobLauncher.java:149) run - Job: [SimpleJob: [name=job1]] completed with the following parameters: [{run.id=1, in=in.csv, out=out.csv}] and the following status: [COMPLETED] in 169ms

14:25:33.480 main INFO (HikariDataSource.java:350) close - HikariPool-1 - Shutdown initiated...

14:25:33.481 main INFO (HikariDataSource.java:352) close - HikariPool-1 - Shutdown completed.

14:25:33.482 main INFO (HogeApp.java:23) main - exit... 0

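Note: I set things up so that the metadata tables are neither created nor touched, but the log above shows that HikariDataSource is still running. Removing the com.h2database dependency from pom.xml also causes an error at startup. I wanted to do something about this, but ... I gave up.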
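One more thing that can be read from the log above: with `hoge.chunk: 2`, the reader is called twice, then the processor twice, and then the reader is called again (the last call, "read - called. 3", hits the end of the file) before the remaining line is processed. The writer does not log anything, so the writes are not visible. The following plain-Java simulation is a sketch of that read / process / write ordering under my own assumptions; `ChunkSketch` is a hypothetical class and none of this is Spring Batch code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

/** Plain-Java simulation of chunk-oriented processing order; not Spring Batch code. */
final class ChunkSketch {

    /** Returns the sequence of read/process/write events for the given items and chunk size. */
    static List<String> run(List<String> items, int chunkSize) {
        List<String> events = new ArrayList<>();
        Iterator<String> source = items.iterator();
        boolean exhausted = false;
        while (!exhausted) {
            // 1. read up to chunkSize items; running out of input ends the loop
            List<String> chunk = new ArrayList<>();
            while (chunk.size() < chunkSize) {
                if (!source.hasNext()) {
                    events.add("read -> end");
                    exhausted = true;
                    break;
                }
                String item = source.next();
                events.add("read " + item);
                chunk.add(item);
            }
            if (chunk.isEmpty()) {
                break;
            }
            // 2. process each item of the chunk
            for (String item : chunk) {
                events.add("process " + item);
            }
            // 3. write the whole chunk at once
            events.add("write " + chunk);
        }
        return events;
    }

    public static void main(String[] args) {
        run(Arrays.asList("abc,hoge", "def,moge", "hoge,HOGE!"), 2).forEach(System.out::println);
    }
}
```

For the three lines of in.csv this prints two reads, two processes, one write, then one read, the end-of-input read, one process, and a final write, which lines up with the order of the DEBUG lines in the log.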

Complete project

That's all for this time.